1) The “studio” standard: direction + consistency + finishing

Great voice generation isn’t just about realism; it’s about repeatability. The most common failure mode in 2026 is not “bad audio”; it’s audio that drifts across episodes, languages, or product screens. ElevenLabs is strongest when you treat it as a studio workflow: you define a voice identity, you direct it with consistent notes, and you finish with subtle production choices.

2) Choose your workflow (and avoid redoing work)

- Voiceover / narration: explainers, courses, audiobooks, podcasts, long‑form YouTube.

- AI Dubbing: training libraries, product marketing, support videos, accessibility.

- Product voice: in‑app guidance, IVR, assistants, notifications.

Each workflow needs a different “definition of done”. Narration needs clarity that holds up over hours of listening. Dubbing needs accurate timing and terminology. Product voice needs short responses that feel consistent and on‑brand.

3) Voice Design v3: how to build a voice identity that holds up

Voice Design v3 is best approached like casting. Don’t hunt for a single prompt—build a voice identity in layers:

- Role: who is speaking, to whom, in which context?

- Energy envelope: calm vs. energetic; avoid extremes unless you need them.

- Pace and pauses: short phrases need natural breathing space; long narration needs predictable rhythm.

- Glossary: brand names, acronyms, and product terms, each with its intended pronunciation. Reuse it everywhere.

Before you render 30 minutes of audio, test the voice on three “anchor lines”: a short hook, a mid‑length explanation, and a CTA. If those three lines sound consistent with each other, you’re ready for long‑form production.
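To make the layers concrete, here is a minimal sketch that stores a voice identity as data and builds the three anchor‑line requests from it. `VoiceIdentity`, `anchor_requests`, and the sample lines are hypothetical names for illustration, not ElevenLabs types. The glossary is applied as plain text substitution, which stands in for whatever pronunciation feature your tooling offers. Actually sending the requests is out of scope.

```python
import re
from dataclasses import dataclass, field


@dataclass
class VoiceIdentity:
    # Hypothetical container for the layers above; not an ElevenLabs data type.
    role: str
    energy: str
    pacing: str
    glossary: dict[str, str] = field(default_factory=dict)  # written term -> spoken form

    def direction_note(self) -> str:
        # One reusable note, so every render receives identical direction.
        return f"Role: {self.role}. Energy: {self.energy}. Pacing: {self.pacing}."

    def apply_glossary(self, text: str) -> str:
        # Swap each glossary term for its spoken form, matching whole terms only
        # (the lookarounds stop "SQL" from matching inside "SQLite").
        for term, spoken in self.glossary.items():
            text = re.sub(rf"(?<!\w){re.escape(term)}(?!\w)", lambda _m: spoken, text)
        return text


def anchor_requests(identity: VoiceIdentity, anchors: dict[str, str]) -> list[dict[str, str]]:
    # One request per anchor line, all sharing the same direction note.
    return [
        {"name": name, "text": identity.apply_glossary(line), "note": identity.direction_note()}
        for name, line in anchors.items()
    ]


ANCHOR_LINES = {
    "hook": "Ship faster with Acme SQL.",
    "explanation": "Acme SQL indexes your tables as you write, so queries stay fast as data grows.",
    "cta": "Start your free trial of Acme SQL today.",
}

narrator = VoiceIdentity(
    role="friendly product expert talking to developers",
    energy="calm, lightly upbeat",
    pacing="steady, short pause after each sentence",
    glossary={"SQL": "sequel"},
)

for request in anchor_requests(narrator, ANCHOR_LINES):
    print(request["name"], "->", request["text"])
```

Because all three requests share one direction note, differences between the anchor renders point to the voice itself rather than to inconsistent instructions.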

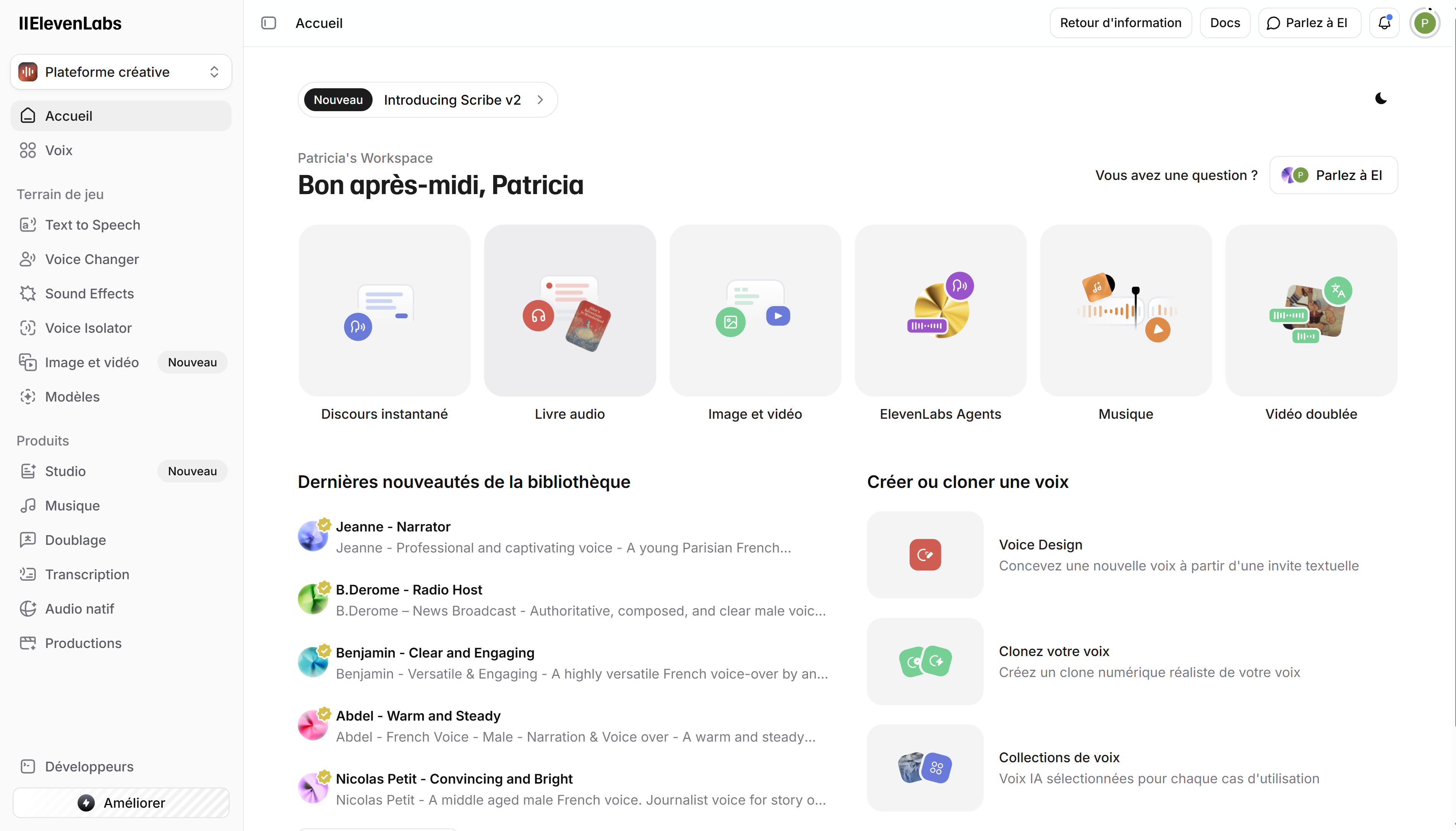

4) Projects: long‑form production without chaos

Projects is where ElevenLabs becomes a production tool instead of a generator. The highest‑leverage habit is to write in reviewable blocks:

- Intro → core points → examples → recap → CTA.

- One idea per block, with a clear intention note (tone, emphasis, pause).

- Re‑render only the blocks that changed.
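One way to know which blocks changed is to fingerprint each block's text together with its intention note and compare the result against the fingerprints saved at the last render. This is a sketch under assumptions. The `(block_id, text, note)` script format is hypothetical, and it does not assume anything about how Projects tracks changes internally.

```python
import hashlib
import json


def block_digest(text: str, note: str) -> str:
    # Hash the words and the intention note together: a change to either means a re-render.
    payload = json.dumps([note, text], ensure_ascii=False).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def blocks_to_rerender(blocks, saved_digests):
    """Return ids of blocks that are new or changed since the last render.

    blocks: list of (block_id, text, note) tuples (a hypothetical script format).
    saved_digests: dict of block_id -> digest stored after the previous render.
    """
    return [
        block_id
        for block_id, text, note in blocks
        if saved_digests.get(block_id) != block_digest(text, note)
    ]


script = [
    ("intro", "Welcome back to the course.", "warm, unhurried"),
    ("recap", "To recap: plan, draft, review.", "calm, pause before each item"),
]
saved = {block_id: block_digest(text, note) for block_id, text, note in script}

# Later, only the recap wording is edited.
script[1] = ("recap", "To recap: plan, draft, review, publish.", "calm, pause before each item")
print(blocks_to_rerender(script, saved))  # ['recap']
```

The note is hashed along with the text because a changed direction ("slower", "more emphasis") also calls for a new take, even when the words are unchanged.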

5) AI Dubbing: keep timing and meaning intact

The safest dubbing pipeline is: clean subtitles → dub → review → deliver. If you have SRT or VTT subtitle files, import them so you start from accurate segmentation and timing. Then:

- Review the first minute before dubbing everything (names, numbers, tone).

- Keep terminology consistent with a shared glossary across languages.

- Deliver with captions so editors can keep the dub in sync with the picture.
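The first‑minute review is easier when you can pull out the cues that fall in that window and see which ones contain numbers or glossary terms. Below is a minimal SRT parser with that check. It is an illustrative sketch, not an ElevenLabs feature. It tolerates a "." before the milliseconds, but it does not handle VTT cues that omit the hours field. `first_minute_flags` is a hypothetical helper.

```python
import re
from dataclasses import dataclass

# Matches SRT timings such as "00:00:01,000 --> 00:00:03,500" (also accepts "." before ms).
TIMING = re.compile(
    r"(\d+):(\d{2}):(\d{2})[,.](\d{3})\s*-->\s*(\d+):(\d{2}):(\d{2})[,.](\d{3})"
)


@dataclass
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str


def _to_seconds(h: str, m: str, s: str, ms: str) -> float:
    return int(h) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000


def parse_srt(srt_text: str) -> list[Cue]:
    cues = []
    # Cues are separated by blank lines; normalize Windows line endings first.
    for block in re.split(r"\n\s*\n", srt_text.replace("\r\n", "\n").strip()):
        lines = block.splitlines()
        for i, line in enumerate(lines):
            match = TIMING.search(line)
            if match:
                parts = match.groups()
                text = "\n".join(lines[i + 1:]).strip()
                cues.append(Cue(_to_seconds(*parts[:4]), _to_seconds(*parts[4:]), text))
                break  # one timing line per cue; blocks without one are skipped
    return cues


def first_minute_flags(cues: list[Cue], terms: list[str], window: float = 60.0):
    """Cues that start inside the review window, each paired with reasons for a closer look."""
    flagged = []
    for cue in cues:
        if cue.start >= window:
            continue
        reasons = []
        if any(ch.isdigit() for ch in cue.text):
            reasons.append("number")
        reasons += [f"term:{t}" for t in terms if t in cue.text]
        flagged.append((cue, reasons))
    return flagged
```

Every cue in the window is still returned, because the whole first minute gets reviewed. The reasons only tell the reviewer where to listen hardest.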

6) Sound Effects: subtle finishing that reads “premium”

Most voice tracks feel unfinished because nothing frames them. Use Sound Effects sparingly:

- Beds: soft ambience under intro/outro.

- Transitions: short risers or whooshes between sections.

- UI cues (product voice): gentle pings and confirmations that don’t mask speech.

Keep effects quiet (especially for playback on mobile speakers) and always prioritize intelligibility.
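In practice, "quiet" means placing the effect some number of decibels below the voice. The sketch below shows that arithmetic on plain lists of float samples. The −18 dB default is a hypothetical starting point, not a figure from this article, and a real mix would normally be done in an editor or audio library.

```python
def db_to_gain(db: float) -> float:
    # Decibels to a linear amplitude factor: -6 dB is roughly half amplitude.
    return 10 ** (db / 20)


def mix_under(voice: list[float], effect: list[float], effect_db: float = -18.0) -> list[float]:
    """Mix an effect under a voice track; both are mono float samples in [-1.0, 1.0].

    effect_db is a hypothetical starting level; tune it by ear on the target devices.
    """
    gain = db_to_gain(effect_db)
    mixed = []
    for i in range(max(len(voice), len(effect))):
        v = voice[i] if i < len(voice) else 0.0
        e = effect[i] if i < len(effect) else 0.0
        # Clamp so the sum never exceeds full scale.
        mixed.append(max(-1.0, min(1.0, v + gain * e)))
    return mixed
```

Clamping prevents hard overflow, but an effect that often drives the mix into the clamp is already too loud to sit under speech.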

7) Safety & consent: your non‑negotiables

If you use voice cloning or anything close to a recognizable voice, treat consent like a contract: explicit permission, documented scope, and a clear ownership trail. For teams, define:

- Who can create or modify a voice.

- What channels and languages are allowed.

- How to handle take‑downs or revisions.

This is as important for trust as it is for production stability—unclear rights often force re‑recording at the worst time.
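The three team rules above can also be written down as a small policy record that tooling checks before rendering. This is a hypothetical sketch, not an ElevenLabs permission model. The field names, the consent‑document reference, and the `revoked` flag for take‑downs are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class VoicePolicy:
    # Hypothetical policy record for one voice; adapt the fields to your own process.
    voice_id: str
    consent_doc: str                                    # reference to the signed consent record
    editors: set[str] = field(default_factory=set)     # who may create or modify the voice
    channels: set[str] = field(default_factory=set)    # allowed channels
    languages: set[str] = field(default_factory=set)   # allowed languages
    revoked: bool = False                               # set when a take-down is requested

    def can_edit(self, user: str) -> bool:
        return not self.revoked and user in self.editors

    def allows(self, channel: str, language: str) -> bool:
        # No consent record, a take-down, or an out-of-scope use all block rendering.
        return (
            not self.revoked
            and bool(self.consent_doc)
            and channel in self.channels
            and language in self.languages
        )


policy = VoicePolicy(
    voice_id="narrator-a",
    consent_doc="consent/narrator-a.pdf",
    editors={"maria"},
    channels={"youtube"},
    languages={"en", "de"},
)
print(policy.allows("youtube", "de"), policy.allows("ivr", "en"))  # True False
```

Handling take‑downs as a single flag means revoking a voice blocks every channel and language at once, with no need to edit each scope by hand.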

8) API overview: batch vs streaming

Most integrations fit one of two patterns:

- Batch for longer audio (courses, videos, podcasts).

- Streaming for short, fast responses (product guidance, assistants, IVR).

Start minimal: one voice, one endpoint, one prompt template. Add caching for repeated lines and keep the prompt style consistent so the voice doesn’t drift between screens.
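Caching and a fixed template fit together: if every screen fills the same template, identical lines produce identical text, and the cache can serve them without another synthesis call. In the minimal sketch below, `synthesize` is a hypothetical callable you would supply, for example a wrapper around your text‑to‑speech request. Its signature is an assumption, and `fake_synthesize` only lets the example run offline. The cache holds complete audio in memory, so it suits batch renders or short prompts you replay whole.

```python
import hashlib
import json
from typing import Callable


class CachedVoice:
    """Cache rendered audio per (voice, text) so repeated lines are synthesized once."""

    def __init__(self, voice_id: str, template: str, synthesize: Callable[[str, str], bytes]):
        self.voice_id = voice_id
        self.template = template  # one shared template keeps phrasing consistent across screens
        self.synthesize = synthesize
        self._cache: dict[str, bytes] = {}
        self.misses = 0

    def speak(self, **fields: str) -> bytes:
        text = self.template.format(**fields)
        key = hashlib.sha256(json.dumps([self.voice_id, text]).encode("utf-8")).hexdigest()
        if key not in self._cache:
            self.misses += 1  # only cache misses cost a synthesis call
            self._cache[key] = self.synthesize(self.voice_id, text)
        return self._cache[key]


def fake_synthesize(voice_id: str, text: str) -> bytes:
    # Stand-in for a real TTS call, so the example runs offline.
    return f"{voice_id}:{text}".encode("utf-8")


guide = CachedVoice("guide-voice", "Step {n}: {action}.", fake_synthesize)
guide.speak(n="1", action="Open settings")
guide.speak(n="1", action="Open settings")  # served from the cache
guide.speak(n="2", action="Turn on backups")
print(guide.misses)  # 2
```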

9) Choosing a plan: pick for output, not for hope

- Test first to validate pronunciation and tone.

- Creators: choose capacity that matches weekly output.

- Teams: prioritize governance and predictable volume.
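"Capacity that matches weekly output" can be estimated from the scripts themselves. This sketch assumes your plan is metered in characters per month, which you should confirm against current pricing. The re‑render allowance and the quota passed in are hypothetical inputs, not published figures.

```python
def weekly_characters(scripts: list[str], rerender_ratio: float = 0.2) -> int:
    """Estimate characters rendered per week.

    rerender_ratio is a hypothetical allowance for text rendered again after review edits.
    """
    base = sum(len(s) for s in scripts)
    return round(base * (1 + rerender_ratio))


def fits_plan(weekly_chars: int, monthly_quota: int) -> bool:
    # Average weeks per month (52 / 12), so a monthly quota can be compared with weekly output.
    return weekly_chars * (52 / 12) <= monthly_quota
```

For example, 1,500 characters of weekly script with a 20% re‑render allowance comes to about 1,800 characters per week, or roughly 7,800 per month.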

10) A pre‑publish checklist

- Scripts are split into short blocks.

- A glossary exists for names/acronyms.

- The first minute is approved (tone + pronunciation).

- Captions are exported for video workflows.

- Effects are subtle and never mask speech.

- Consent is documented for any cloned voice.
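Most of this checklist can be checked automatically before release. The sketch below assumes a hypothetical `Release` record and an 800‑character limit for a "short block". Both are illustrative choices, not rules from the article. Whether effects mask speech is still a listening check, so it is left out here.

```python
from dataclasses import dataclass

MAX_BLOCK_CHARS = 800  # hypothetical limit for a "short block"


@dataclass
class Release:
    # Hypothetical release record; populate it from your own project data.
    blocks: list[str]
    glossary: dict[str, str]
    first_minute_approved: bool
    is_video: bool
    captions_exported: bool
    uses_cloned_voice: bool
    consent_documented: bool


def prepublish_problems(r: Release) -> list[str]:
    """Return the checklist items that fail; an empty list means ready to publish."""
    problems = []
    if any(len(b) > MAX_BLOCK_CHARS for b in r.blocks):
        problems.append("script has blocks over the length limit")
    if not r.glossary:
        problems.append("no glossary for names/acronyms")
    if not r.first_minute_approved:
        problems.append("first minute not approved")
    if r.is_video and not r.captions_exported:
        problems.append("captions not exported")
    if r.uses_cloned_voice and not r.consent_documented:
        problems.append("consent not documented for cloned voice")
    return problems
```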